About me

I completed my Ph.D. at the Image and Visual Representation Lab at EPFL, Lausanne, Switzerland. I am grateful to have been mentored by Prof. Sabine Süsstrunk, Dr. Mathieu Salzmann, Prof. Pascal Fua and Prof. Anand Paul. I love working on interdisciplinary subjects and am currently applying deep learning to reconfigure 19th- to 20th-century comics for digital media. I have also worked with satellites and, during my Master's, with the evolutionary intelligence mechanisms of biological plants.

I am passionate about learning, teaching and sharing my ideas about artificial intelligence and machine learning.

I volunteer for Teach for India and EduCare (a non-profit initiative for teaching children and empowering girls). I also volunteer as a mentor at India STEM Foundation, where I host workshops and mentor teams. Through these efforts, I aim to bring STEM to communities in a way that is inclusive of all representations: gender, sexual orientation, nationality, race, etc.

News

Organiser of Women in Computer Vision Workshop (WiCV) at CVPR 2024, Seattle.

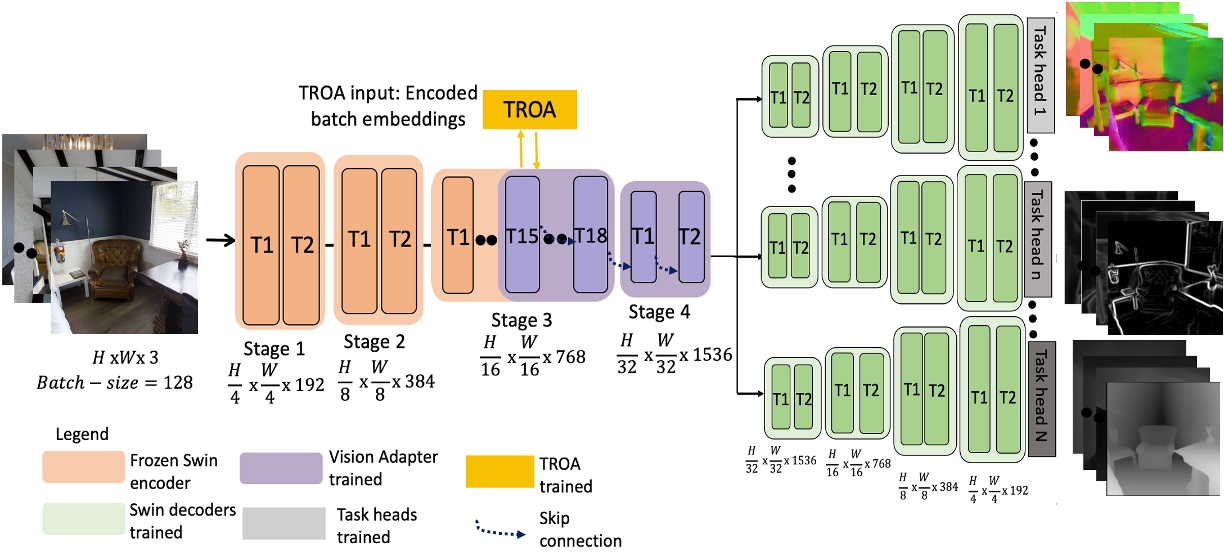

Accepted work to ICCV 2023, Paris: Vision Transformer Adapters for Generalizable Multitask Learning.

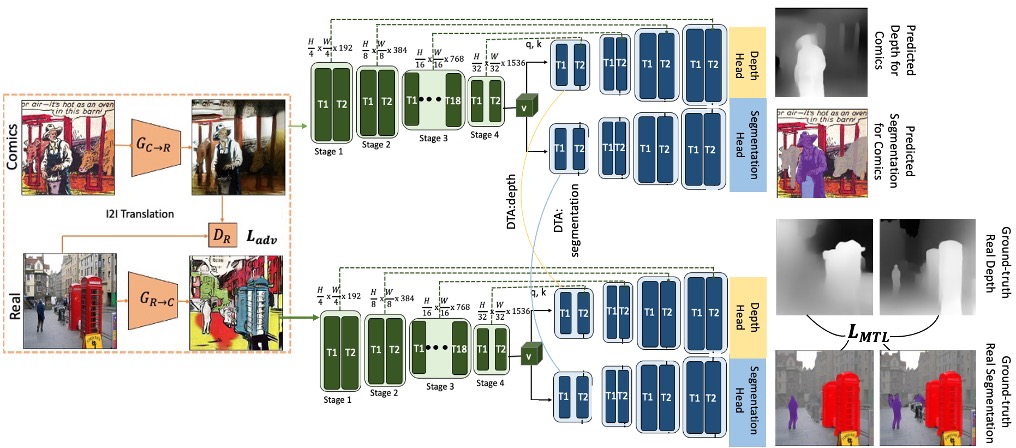

Accepted work to CVPR 2023 proceedings, Vancouver: Dense Multitask Learning to Reconfigure Comics.

Reviewer for CVPR 2024 and 2023, ECCV 2024, ACCV 2024, ICCV 2023, WACV 2023 and 2024.

Successfully defended my Ph.D. thesis in Computer Science at EPFL. The jury comprised Prof. Andrew Zisserman (Oxford, DeepMind), Prof. Sara Beery (MIT, Google), Prof. Wenzel Jakob (EPFL), Prof. Pascal Fua (EPFL), Prof. Ed Bugnion (EPFL), and Prof. Mathieu Salzmann (EPFL).

Awarded the EPFL Teaching Assistant Award 2022 for teaching excellence in Computational Photography.

Accepted work to Transactions on Machine Learning Research (TMLR 2022): Modeling Object Dissimilarity for Deep Saliency Prediction.

Accepted work to CVPR 2022, New Orleans: MulT: An End-to-End Multitask Learning Transformer.

Accepted work to WACV 2022, Hawaii: Estimating Image Depth in the Comics Domain.

Accepted work to Signal Processing Letters 2021: Fidelity Estimation Improves Noisy-Image Classification with Pretrained Networks.

I was honoured to take part in the podcast Deep Learning for Machine Vision, which has 15K+ views.

I was honoured to take part in the podcast Solving an Optimization Problem with a Custom Built Algorithm, which has 10K+ views.

Publications

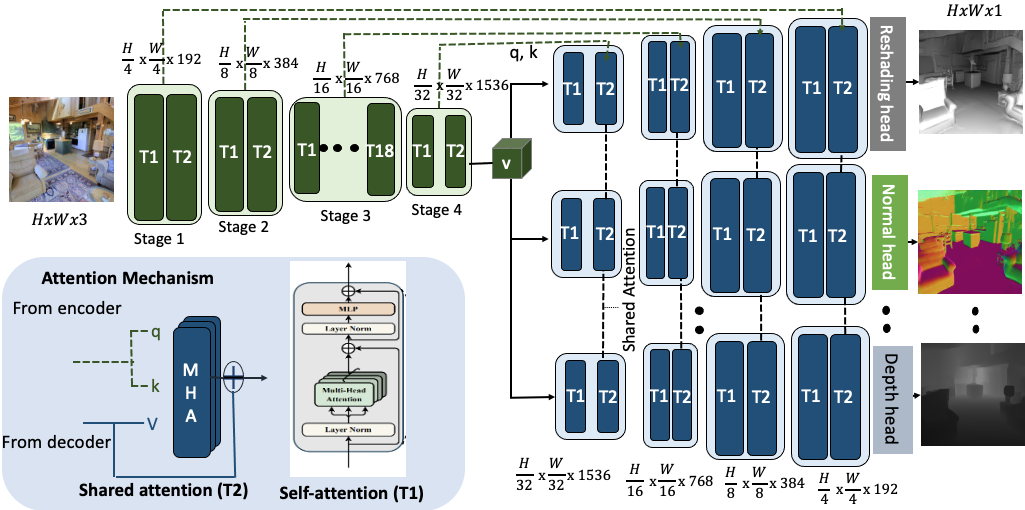

Vision Transformer Adapters for Generalizable Multitask Learning

Dense Multitask Learning To Reconfigure Comics

MulT: An End-to-End Multitask Learning Transformer

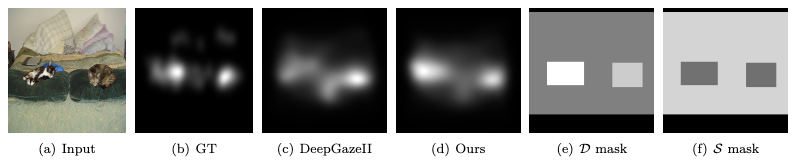

Modeling Object Dissimilarity for Deep Saliency Prediction

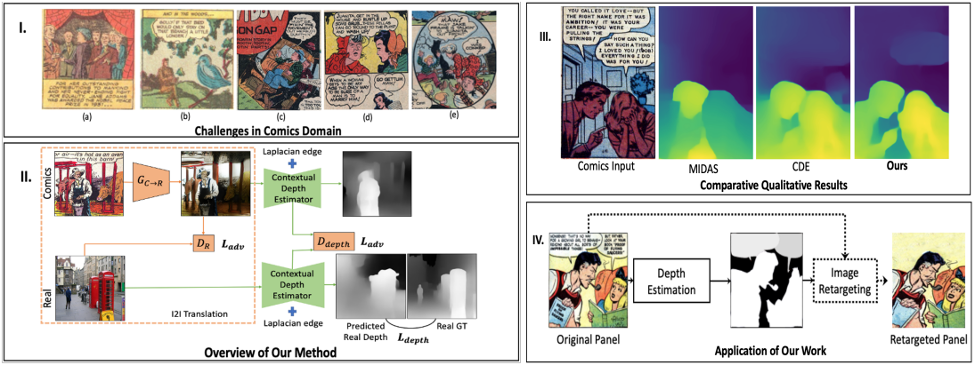

Estimating Image Depth in the Comics Domain

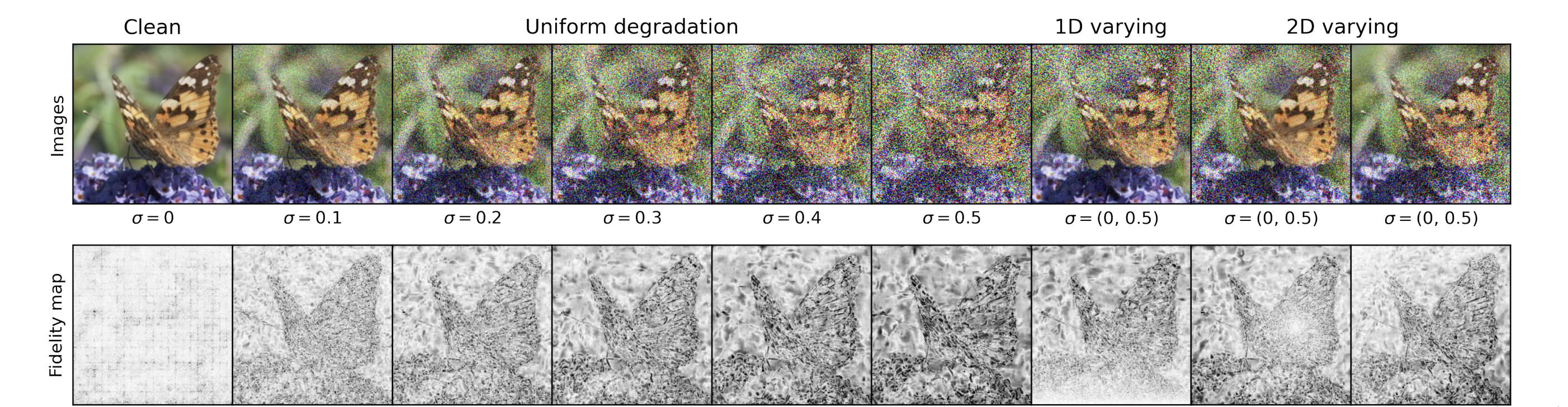

Fidelity Estimation Improves Noisy-Image Classification With Pretrained Networks

DUNIT: Detection-Based Unsupervised Image-to-Image Translation

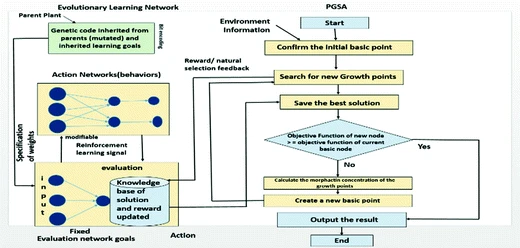

Image Analysis using a novel learning algorithm based on Plant Intelligence

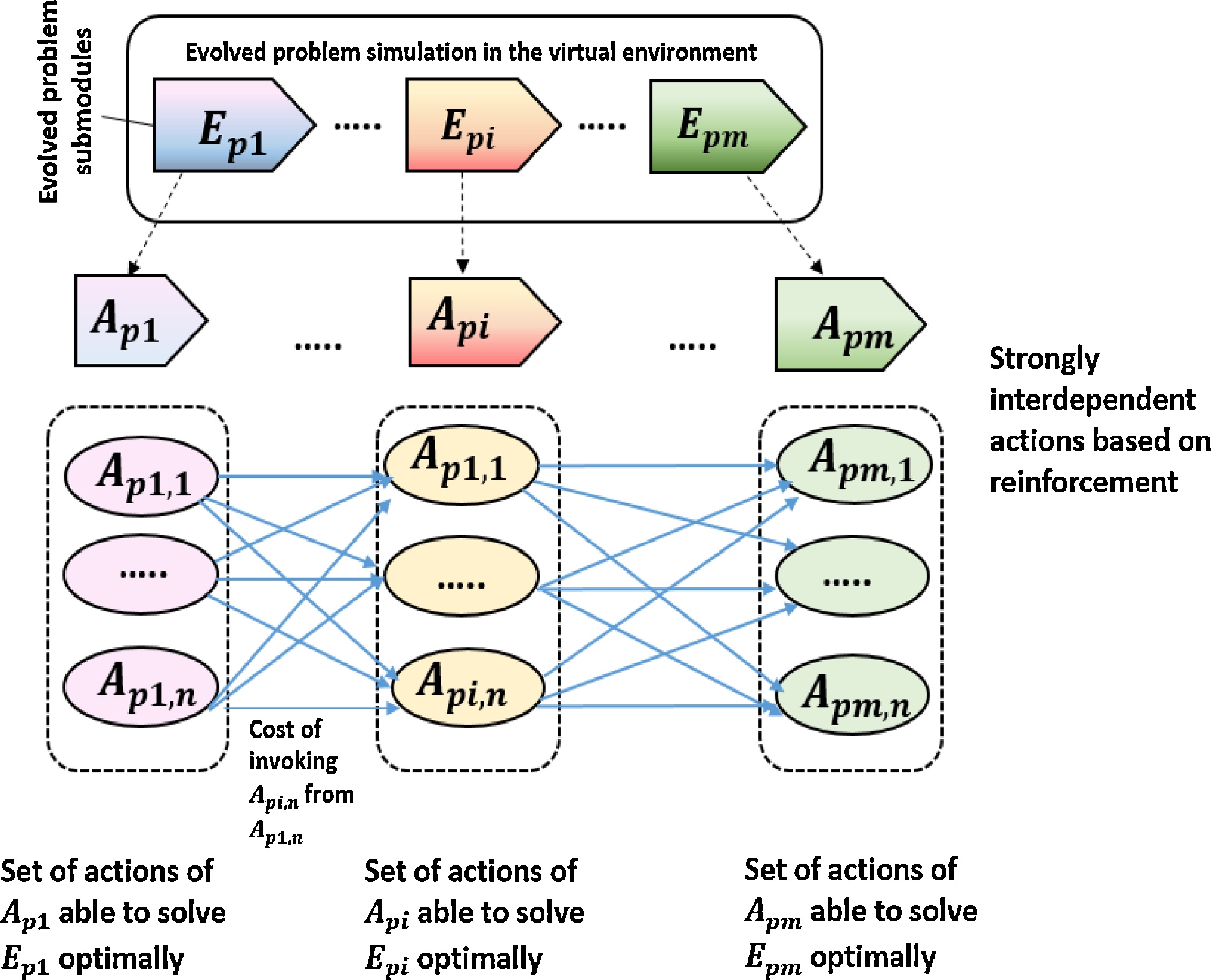

An Immersive Learning Model Using Evolutionary Learning

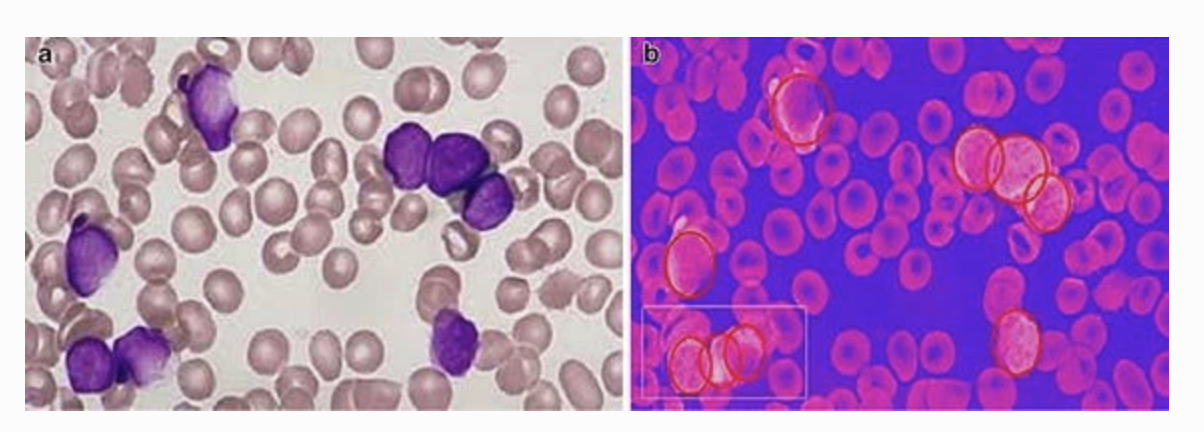

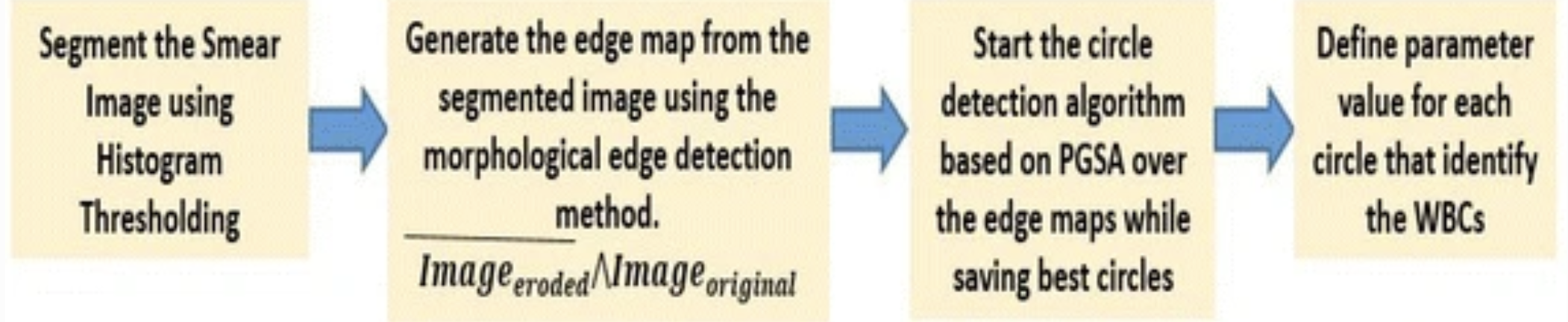

A Leukocyte Detection technique in Blood Smear Images using Plant Growth Simulation Algorithm

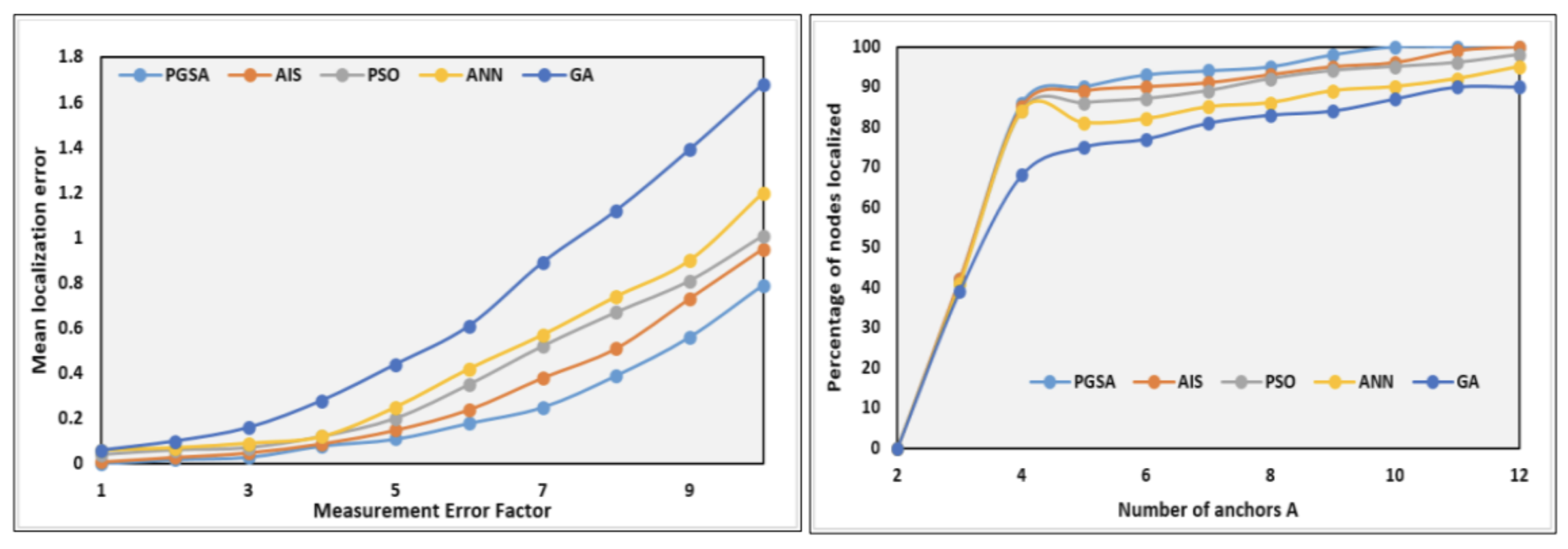

A Hybrid Search Optimization Technique Based on Evolutionary Learning in Plants

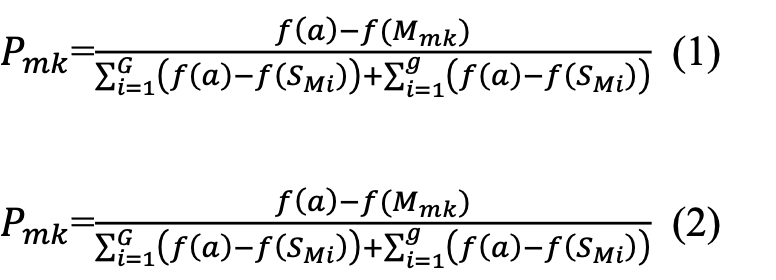

An object localization optimization technique in medical images using plant growth simulation algorithm

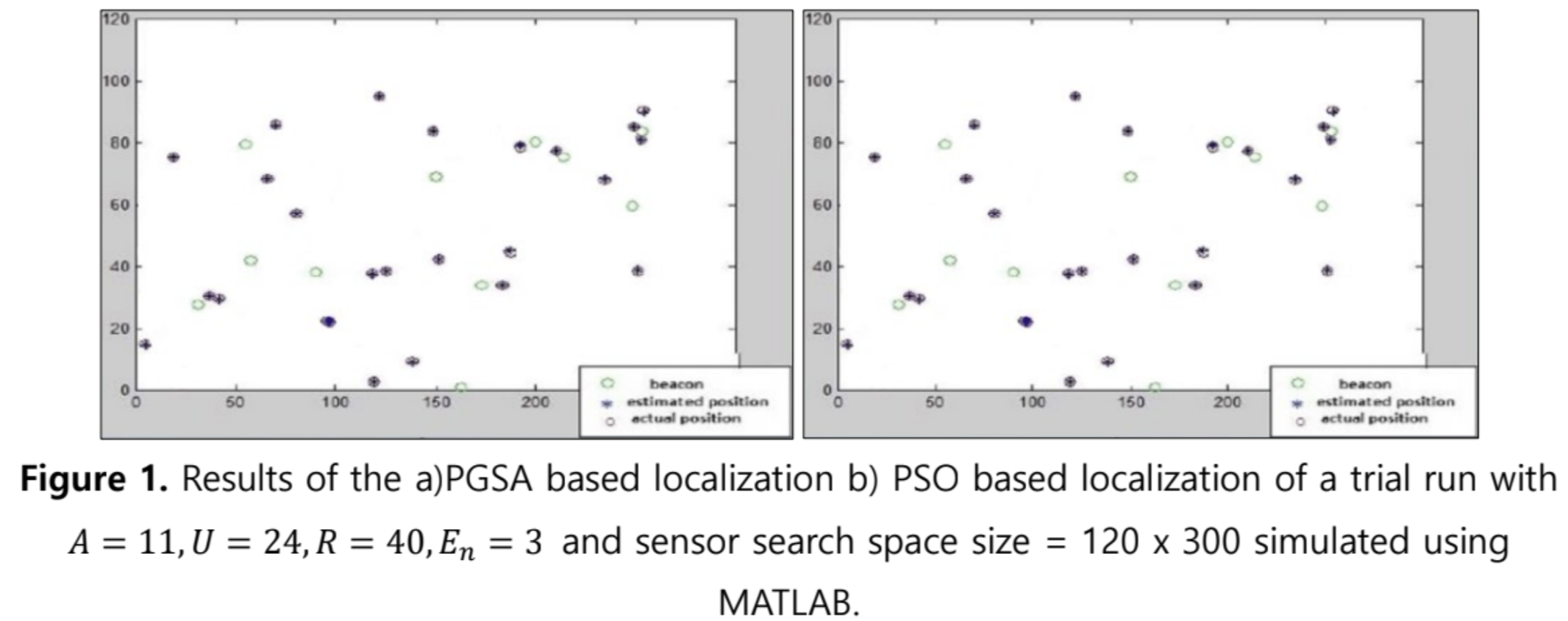

Autonomous Terrestrial Image Segmentation and Sensor Node Localization for Disaster Management using Plant Growth Simulation Algorithm

Evolutionary Reinforcement Learning based Search Optimization

Adaptive Transcursive Algorithm for Depth Estimation in Deep Learning Networks

Talks

- Poster presentation at CVPR 2022 on Multitask Learning in Computer Vision, New Orleans, USA, 2022.

- Invited talk on the Evolution of Computer Vision in Recent Years: From Convolutional Neural Networks to Transformers and Self-supervised Learning, at Synapse, Milan, 2022.

- Oral and poster presentation at WACV 2022 on Learning Image Depth in Comics Domain, Hawaii, USA, 2022.

- Poster presentation at CVPR 2020 on Detection-Based Unsupervised Image-to-Image Translation, Seattle, USA, 2020.

- Invited speaker on Future Trends in Deep Learning at the International Conference on Data Science and Big Data Analytics, Toronto, Canada, May 24-25, 2018.

- Poster presentation at WiML (Women in Machine Learning) workshop, Long Beach, California, USA, 2017.

- Talk on Advances in Deep Learning, CiTE, Samsung Intelligent Media Research Centre, POSTECH, South Korea, 2017.

- Oral presentation at AAAI on Plant Intelligence and how it can be used to optimize Machine Learning, San Francisco, USA, 2017.

- Oral presentation at ICSI, Bali, Indonesia, 2016.

- Oral presentation and poster presentation at ACM SAC, Italy, 2016.

- Talk at Platcon, Jeju, South Korea, 2017.

- Departmental talk on Vision, Deep Learning and Optimization, Kyungpook National University, 2016.

- Oral presentation at the Computer Science and Engineering department, Kyungpook National University, 2015.

- Oral presentation at the project grant proposal meetings, Daegu, South Korea, 2015-2017.

Academic Services

Reviewer of Machine Learning, Signal Processing and IoT journals and conferences:

- Reviewer of proceedings of IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).

- Reviewer of proceedings of IEEE Women in Machine Learning.

- Reviewer of IEEE Transactions on Signal and Information Processing.

- Reviewer of Elsevier Computers and Electrical Engineering.

- Reviewer of IEEE Intelligent Transport Systems [invited].

- Reviewer of Taylor and Francis Behaviour and Information Technology.

- Reviewer of SpringerPlus.

- Reviewer of IEEE Transactions on Emerging Topics in Computing.

- Reviewer of Springer Cluster Computing: The Journal of Networks, Software Tools and Applications.

- Reviewer of proceedings of ACM SAC 2016, 2017.

Teaching and Research Supervision:

- Supervised two successful Master's theses at EPFL on image translation and dense multi-task learning. Past students are working at Google.

- Supervised 5 research projects leading to 3 publications.

- Head teaching assistant for Computational Photography CS-413 at EPFL.

Member of organizations:

- Member of Association for the Advancement of Artificial Intelligence (AAAI).

- Member of International Machine Learning Society (IMLS).

- Member of Computer Society of India.

- Member of Association for Computing Machinery.

Awards

- Swiss National Science Foundation Sinergia Grant, Switzerland 2019-2023.

- Women in Machine Learning (WiML) Grant, 2017.

- ACM SAC SRC best student paper nomination (top 5 globally), 2016.

- ACM SIGAPP Travel Award, Italy, 2016.

- KNU International Student Ambassador, 2017-present.

- Brain Korea 21 Plus grant for research, Kyungpook National University, awarded to the top 1% of applicants in the Department of Computer Science Engineering, 2015-2017.

- Awarded full merit scholarship by Kyungpook National University, 2015-2017 (4 Semesters).

- Christ University Merit Scholarship - all 8 semesters, 2011-2015. Dean's List.

- MS Artificial Intelligence, Offer of Study, from New York University, 2015.

- MBA Business Analytics, Offer of Study, from University of Tampa, Florida, USA, 2015.

- BS Computer Science Engineering (transfer), Offer of Study, from University of Rochester, USA, 2013.

- Best Overall Performer of the Year 2012 of all undergraduate and postgraduate students, Christ University.

- Runner-up, International Science Debate Competition by Quanta, November 2009.

- Won gold medal and ranked 1st in the National Cyber Olympiad in India, 2005.

- Awarded Distinction in Macmillan International Assessment, University of New South Wales, Australia, 2004-2006.

Outreach

- Sponsored and mentored a team of middle school children for the First Lego League in India.

- Volunteered as a mentor at the Robo Siksha Kendra (the non-profit robotics school of India STEM Foundation) event for encouraging children, especially girls, in STEM and AI.

- Organized a preparation workshop on Computer Science for the International Robotics Competition at India STEM Foundation, attended by 350 children aged 6 to 12. Mentored an all-girls team for the International Robotics Competition.

Further Work

- 3D reconstruction of objects in the comics domain: work done as part of the Swiss National Science Foundation Sinergia project.